Saliency Maps

What is it?

A saliency map is a feature-based explainability (XAI) method that is available for gradient-based ML models. Its role is to estimate how much each variable contributes to the model prediction for a given data sample. In the case of NLP, variables are usually tokens or words.

Literature

In NLP, the current state-of-the-art models (Transformers) are black boxes. It’s not trivial to understand why they make mistakes or why they are right.

Saliency maps first became popular for computer vision problems, where the result is a heatmap of the pixels that contribute most to the prediction. Interest in saliency maps as an XAI technique for NLP has grown recently:

- Wallace et al. [1] implement vanilla gradient, integrated gradients, and SmoothGrad in their AllenNLP toolkit for several NLP tasks and mostly pre-BERT models.

- Han et al. [2] use gradient-based saliency maps for sentiment analysis and NLI on BERT.

- Atanasova et al. [3] evaluate different saliency techniques on a variety of models, including BERT-based models.

- Bastings and Filippova [4] argue for using saliency maps over attention-based explanations when determining the input tokens most relevant to a prediction. Their end-user is a model developer, rather than a user of the system.

Other XAI techniques

Apart from saliency maps, other feature-based XAI techniques exist, such as SHAP or LIME.

Where is this used in Azimuth?

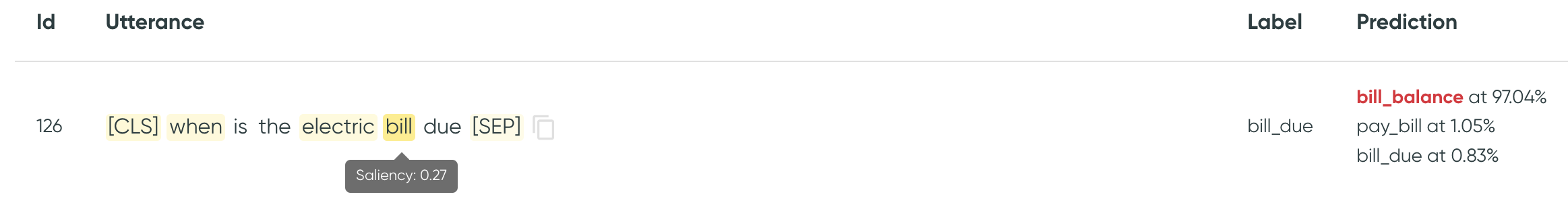

In Azimuth, we display a saliency map over a specific utterance to show the importance of each token to the model prediction. When available, it is displayed in both the Utterance Details and the Utterances Table.

Saliency example

In this example, `bill` is the word that contributes the most to the prediction `bill_balance`.

How is it computed?

We use the Vanilla Gradient technique, shown to satisfy input invariance in Kindermans et al. [5]. We simply backpropagate the gradient to the input layer of the network: in our case, the word-embedding layer. We then take the L2 norm to aggregate the gradients across all dimensions of the layer to determine the saliency value for each token.
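The two steps above (gradient with respect to the token embeddings, then an L2 norm per token) can be sketched in a few lines of plain Python. This is only an illustration, not Azimuth's implementation: the toy model (`logit`), the tokens, the 3-dimensional embeddings, and the class weights are all hypothetical, and the gradient is written out analytically instead of being obtained by backpropagation through a real network.

```python
import math

def logit(embeddings, class_weights):
    """Toy class score (hypothetical model): sum over tokens of w . tanh(e_i)."""
    return sum(
        w * math.tanh(x)
        for token_embedding in embeddings
        for w, x in zip(class_weights, token_embedding)
    )

def embedding_gradients(embeddings, class_weights):
    """Gradient of the toy logit w.r.t. every embedding dimension of every token.

    For this model, d(logit)/d(e_ij) = w_j * (1 - tanh(e_ij)^2).
    """
    return [
        [w * (1.0 - math.tanh(x) ** 2) for w, x in zip(class_weights, token_embedding)]
        for token_embedding in embeddings
    ]

def token_saliency(gradients):
    """Aggregate each token's gradient vector into one value with the L2 norm."""
    return [math.sqrt(sum(g * g for g in token_grad)) for token_grad in gradients]

# Hypothetical utterance and made-up 3-dimensional embeddings.
tokens = ["pay", "my", "bill"]
embeddings = [
    [1.5, -1.0, 2.0],
    [2.5, 2.0, -3.0],
    [0.1, -0.2, 0.0],
]
class_weights = [1.0, -2.0, 0.5]  # weights of the predicted class (assumed)

gradients = embedding_gradients(embeddings, class_weights)
scores = token_saliency(gradients)

for token, score in zip(tokens, scores):
    print(f"{token}: {score:.3f}")
```

With these made-up numbers, the token with the largest score is `bill`. In a real model, the gradients would come from the framework's automatic differentiation applied at the word-embedding layer, and only the aggregation step would look like `token_saliency`.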

Saliency maps are only supported for certain models

Saliency maps are only available for models that have gradients. Additionally, their input layer needs to be a token-embedding layer, so that the gradients can be computed per token. For example, a model whose input layer is a sentence embedder cannot backpropagate the gradients at token-level granularity within the utterance.

Configuration

Assuming the model architecture allows for saliency maps, the name of the input layer needs to be defined in the config file, as detailed in Model Contract Configuration.

1. Wallace, Eric, et al. "AllenNLP Interpret: A framework for explaining predictions of NLP models." arXiv preprint arXiv:1909.09251 (2019).

2. Han, Xiaochuang, Byron C. Wallace, and Yulia Tsvetkov. "Explaining black box predictions and unveiling data artifacts through influence functions." arXiv preprint arXiv:2005.06676 (2020).

3. Atanasova, Pepa, et al. "A diagnostic study of explainability techniques for text classification." arXiv preprint arXiv:2009.13295 (2020).

4. Bastings, Jasmijn, and Katja Filippova. "The elephant in the interpretability room: Why use attention as explanation when we have saliency methods?" arXiv preprint arXiv:2010.05607 (2020).

5. Kindermans, Pieter-Jan, et al. "The (un)reliability of saliency methods." Explainable AI: Interpreting, Explaining and Visualizing Deep Learning. Springer, Cham, 2019. 267-280.